- #CT213 - Computer Systems & Organisation

- Previous Topic: CPU Management - Scheduling

- Next Topic: Memory Management

- Relevant Slides:

-

Concurrent Programming

collapsed:: true

- What are Concurrent Programs? #card

card-last-interval:: 4

card-repeats:: 2

card-ease-factor:: 2.22

card-next-schedule:: 2022-11-21T09:47:57.127Z

card-last-reviewed:: 2022-11-17T09:47:57.127Z

card-last-score:: 3

- Concurrent Programs are interleaving sets of sequential atomic instructions.

- i.e., interacting sequential processes that run at the same time, on the same or different processors.

- Processes are interleaved: at any instant, each processor is executing one instruction from one of the sequential processes.

-

Correctness

- Generalisation: A program is correct if, whenever its preconditions hold, its postconditions also hold.

- A concurrent program must be correct under ^^all possible interleavings.^^

- If each process copies shared values into registers, does its maths there, and writes the result back, then the final result depends on the interleaving (an indeterminate calculation).

- This dependency on unforeseen circumstances is known as a Race Condition.

- What is a Race Condition? #card

card-last-interval:: 0.88

card-repeats:: 2

card-ease-factor:: 2.36

card-next-schedule:: 2022-11-18T06:49:18.142Z

card-last-reviewed:: 2022-11-17T09:49:18.143Z

card-last-score:: 3

- A Race Condition occurs when a program output is dependent on the sequence or timing of code execution.

- If multiple processes enter a critical section at about the same time, each will attempt to update the shared data structure.

- This will lead to surprising & undesirable results.

- You must work to avoid this with concurrent code.

- If we get different results every time we run some code, the result is indeterminate.
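A lost update can be reproduced without real threads by replaying two interleavings by hand. The sketch below is a hypothetical model, not taken from the course material: each "process" increments a shared counter in three atomic steps (load, add, store), and the names `proc`, `run_sequential` and `run_interleaved` are invented for illustration.

```c
#include <assert.h>

/* Hypothetical model: every process has one private register. */
typedef struct {
    int reg;
} proc;

static int shared;  /* the shared variable both processes update */

static void load(proc *p)  { p->reg = shared; }  /* atomic step 1 */
static void add(proc *p)   { p->reg += 1; }      /* atomic step 2 */
static void store(proc *p) { shared = p->reg; }  /* atomic step 3 */

/* Interleaving 1: P1 runs to completion, then P2 runs. */
int run_sequential(void) {
    proc p1 = {0}, p2 = {0};
    shared = 0;
    load(&p1); add(&p1); store(&p1);
    load(&p2); add(&p2); store(&p2);
    return shared;
}

/* Interleaving 2: both processes load before either one stores. */
int run_interleaved(void) {
    proc p1 = {0}, p2 = {0};
    shared = 0;
    load(&p1); load(&p2);
    add(&p1);  add(&p2);
    /* Both registers hold 1 because each process saw the old value 0. */
    assert(p1.reg == 1 && p2.reg == 1);
    store(&p1); store(&p2);  /* the second store overwrites the first */
    return shared;
}
```

Under these assumptions `run_sequential()` returns 2 while `run_interleaved()` returns 1: the same instructions give different results depending only on their order, which is the race condition described above.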

- What is a Critical Section? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.36

card-next-schedule:: 2022-11-18T00:00:00.000Z

card-last-reviewed:: 2022-11-17T09:47:41.294Z

card-last-score:: 1

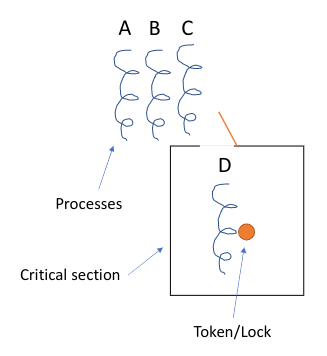

- A critical section is a part of a program where a shared resource is accessed.

- A critical section must be protected in ways that avoid concurrent access.

- What are Deterministic Computations? #card

card-last-interval:: 0.98

card-repeats:: 2

card-ease-factor:: 2.36

card-next-schedule:: 2022-11-15T19:07:48.411Z

card-last-reviewed:: 2022-11-14T20:07:48.412Z

card-last-score:: 3

- Deterministic Computations have the same result each time.

- We want deterministic concurrent code.

- We can use synchronisation mechanisms.

-

Handling Race Conditions

- We need a mechanism to control access to shared resources in concurrent code - Synchronisation is necessary for any shared data structure.

- The idea is to focus on the critical sections of the code, i.e., sections that access shared resources.

- We want critical sections to run with mutual exclusion - only one process should be able to execute that code at a time.

-

Critical Section Properties #card

card-last-interval:: 0.98 card-repeats:: 2 card-ease-factor:: 2.36 card-next-schedule:: 2022-11-24T11:17:06.949Z card-last-reviewed:: 2022-11-23T12:17:06.950Z card-last-score:: 3

- Mutual Exclusion: Only one process can access the critical section at a time.

- Guarantee of Progress: Processes outside the critical section cannot stop another from entering it.

- Bounded Waiting: A process waiting to enter the critical section will eventually enter.

- Processes in the critical section will eventually leave.

- Performance: The overhead of entering / exiting should be small.

- Fair: Don't make certain processes wait much longer than others.

-

Atomicity #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-18T00:00:00.000Z card-last-reviewed:: 2022-11-17T09:48:51.808Z card-last-score:: 1

- Basic atomicity is provided by the hardware.

- E.g., aligned, word-sized reads & writes (i.e., references & assignments) are atomic on most CPUs.

- However, higher-level constructs (i.e., any sequence of two or more CPU instructions) are not atomic in general.

- Some languages (e.g., Java) have mechanisms to specify multiple instructions as atomic.

-

Conditional Synchronisation

-

Mutual Exclusion Solutions

collapsed:: true-

Locks

collapsed:: true

- What is a lock? #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-16T00:00:00.000Z card-last-reviewed:: 2022-11-15T18:42:37.491Z card-last-score:: 1

-

Lock States & Operation #card

card-last-interval:: 0.95 card-repeats:: 2 card-ease-factor:: 2.36 card-next-schedule:: 2022-11-22T11:06:37.168Z card-last-reviewed:: 2022-11-21T13:06:37.168Z card-last-score:: 3

- Locks have 2 states:

- Held: Some process is in the critical section.

- Not Held: No process is in the critical section.

- Locks have 2 operations:

- Acquire: Mark lock as held or wait until released. If not held, this is executed immediately.

- If many processes call acquire, only one process can get the lock.

- Release: Mark lock as not held.

-

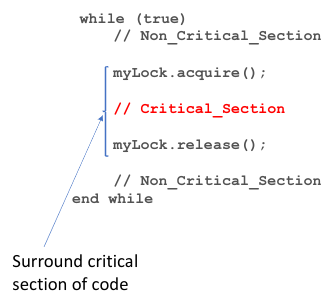

Using Locks #card

card-last-interval:: 2.8 card-repeats:: 2 card-ease-factor:: 2.6 card-next-schedule:: 2022-11-17T15:07:42.144Z card-last-reviewed:: 2022-11-14T20:07:42.144Z card-last-score:: 5

-

Lock Benefits #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-18T00:00:00.000Z card-last-reviewed:: 2022-11-17T19:36:01.540Z card-last-score:: 1

- Only one process can execute the critical section code at a time.

- When a process is finished (& calls `release()`), another process can enter the critical section.

- Achieves the requirements of mutual exclusion & progress for concurrent systems.

-

Lock Limitations #card

card-last-interval:: 0.98 card-repeats:: 2 card-ease-factor:: 2.36 card-next-schedule:: 2022-11-15T19:09:12.011Z card-last-reviewed:: 2022-11-14T20:09:12.011Z card-last-score:: 3

- Acquiring a lock only blocks processes trying to acquire the same lock.

- Process may acquire other locks.

- ^^You must use the same lock for all critical sections accessing the same data (or resource).^^
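As a minimal sketch of the acquire / critical section / release pattern, the example below uses a POSIX mutex (`pthread_mutex_lock` / `pthread_mutex_unlock`) as the lock. The names `counter`, `counter_lock`, `worker` and `run_two_workers` are invented for illustration, and threads stand in for the processes in these notes.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define INCREMENTS 100000

static long counter = 0;  /* shared data */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Each worker increments the shared counter INCREMENTS times. */
static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < INCREMENTS; i++) {
        pthread_mutex_lock(&counter_lock);    /* acquire */
        counter++;                            /* critical section */
        pthread_mutex_unlock(&counter_lock);  /* release */
    }
    return NULL;
}

/* Runs two workers to completion and returns the final counter value. */
long run_two_workers(void) {
    pthread_t t1, t2;
    int rc;

    counter = 0;
    rc = pthread_create(&t1, NULL, worker, NULL);
    assert(rc == 0);
    rc = pthread_create(&t2, NULL, worker, NULL);
    assert(rc == 0);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return counter;
}
```

Because every access to `counter` goes through the same lock, `run_two_workers()` should always return `2 * INCREMENTS`. Protecting one of the increments with a different mutex would reintroduce the race.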

-

Hardware-Based Lock

- Processor has a special instruction called "test & set".

- Allows atomic read and update.

-

    // C code modelling test-and-set behaviour.
    // On real hardware the whole body executes as a single atomic instruction.
    bool test_and_set(bool *flag) {
        bool old = *flag;
        *flag = true;
        return old;
    }

-

Hardware-Based Spinlock

-

    struct lock {
        bool held;  // initially false
    };

    void acquire(struct lock *lock) {
        while (test_and_set(&lock->held))
            ;  // busy-wait: the lock was already held
    }

    void release(struct lock *lock) {
        lock->held = false;
    }

-

Drawbacks of Spinlocks #card

card-last-interval:: 3.45 card-repeats:: 2 card-ease-factor:: 2.46 card-next-schedule:: 2022-11-18T06:09:40.073Z card-last-reviewed:: 2022-11-14T20:09:40.073Z card-last-score:: 3

- Spinlocks are a form of busy waiting -> they burn CPU time.

- Once acquired, they are held until explicitly released.

- Inefficient if the lock is held for long periods.

- OS overhead of context switching.

- If the Process Scheduler puts the lock holder to sleep while the lock is held, all other processes use their CPU time to spin while the process with the lock makes no progress.
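In standard C11 the test-and-set spinlock can be sketched with `atomic_flag` from `<stdatomic.h>`, whose `atomic_flag_test_and_set` atomically sets the flag and returns its previous value. The type name `spinlock` and the functions `spin_acquire` / `spin_release` are assumptions made for this example.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

typedef struct {
    atomic_flag held;  /* clear = not held, set = held */
} spinlock;

/* Spins until the previous value of the flag was "clear". */
void spin_acquire(spinlock *l) {
    assert(l != NULL);
    while (atomic_flag_test_and_set(&l->held))
        ;  /* busy-wait: this loop is what burns CPU time */
}

/* Marks the lock as not held so that one spinner can get through. */
void spin_release(spinlock *l) {
    assert(l != NULL);
    atomic_flag_clear(&l->held);
}
```

A lock would be declared as `spinlock l = { ATOMIC_FLAG_INIT };`. The empty loop body in `spin_acquire` is the drawback listed above: a waiter keeps its CPU busy for as long as the holder keeps the lock.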

-

-

Do locks give us sufficient safety? #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T20:24:13.793Z card-last-score:: 1

- If you can demonstrate any cases in which the following properties do not hold, then the system is not correct.

-

- Check Safety Properties: These must always be true.

- Mutual Exclusion: Two processes must not interleave certain sequences of instructions.

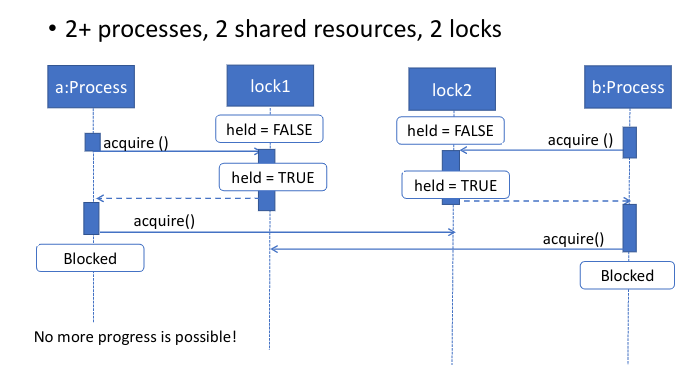

- Absence of Deadlock: Deadlock is when a non-terminating system cannot respond to any signal.

-

- Check Liveness Properties: These must eventually be true.

- Absence of Starvation: Information that is sent is delivered.

- Fairness: Any contention must be resolved.

-

-

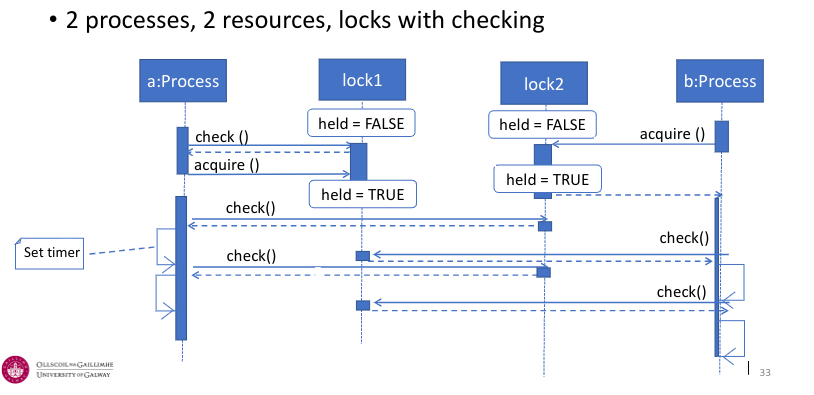

Lock Deadlock Scenario

-

Protocols to Avoid Deadlock #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T20:09:06.660Z card-last-score:: 1

- Add a timer to the `lock.request()` method.

- Cancel the job & attempt it another time if it takes too long.

- Add a new `lock.check()` method to see if a lock is already held before requesting it.

- You can do something else and come back & check again.

- Avoid hold & wait protocol.

- Never hold onto one resource when you need two.
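The "check before requesting" and "avoid hold & wait" ideas can be sketched with POSIX `pthread_mutex_trylock`, which returns `EBUSY` instead of blocking when the mutex is already locked. The helpers `acquire_both` and `release_both` are hypothetical names; `pthread_mutex_trylock` stands in for the notes' `lock.check()`.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>
#include <stdbool.h>

/* Tries to take both locks without ever blocking while holding one.
   Returns true with both locks held, or false with neither held. */
bool acquire_both(pthread_mutex_t *first, pthread_mutex_t *second) {
    pthread_mutex_lock(first);
    int rc = pthread_mutex_trylock(second);
    if (rc == 0)
        return true;              /* got both */
    assert(rc == EBUSY);          /* second lock is held by someone else */
    pthread_mutex_unlock(first);  /* back off: do not hold & wait */
    return false;
}

/* Releases the locks in the reverse order of acquisition. */
void release_both(pthread_mutex_t *first, pthread_mutex_t *second) {
    pthread_mutex_unlock(second);
    pthread_mutex_unlock(first);
}
```

A caller that gets `false` can do other work and retry later. If two processes keep backing off and retrying in step, neither makes progress, which is how avoiding deadlock this way can turn into livelock.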

-

Livelock by trying to avoid deadlock

-

Starvation

- What is Starvation? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.5

card-next-schedule:: 2022-11-15T00:00:00.000Z

card-last-reviewed:: 2022-11-14T20:08:54.153Z

card-last-score:: 1

- Starvation is a more general case of livelock.

- One or more processes do not get run as another process is locking the resource.

-

Locks / Critical Sections & Reliability

- What if a process is interrupted, suspended, or crashes inside its critical section? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.5

card-next-schedule:: 2022-11-15T00:00:00.000Z

card-last-reviewed:: 2022-11-14T16:17:34.955Z

card-last-score:: 1

- In the middle of the critical section, the system may be in an inconsistent state. The process is holding a lock, and if it dies, no other process waiting on that lock can proceed.

- Developers must ensure that critical regions are very short and always terminate.

-

Beyond Locks

- Locks only provide mutual exclusion.

- They ensure that only one process is in the critical section at a time.

- Locks are good for protecting our shared resource to prevent race conditions & avoid non-deterministic execution.

- What about fairness, avoiding starvation, & livelock?

- We need to be able to place an ordering on the scheduling of a process.

-

Semaphores

collapsed:: true

- Example: We want to place an order on when processes execute.

background-color:: green

- Producer-Consumer:

- Producer: Creates a resource (data).

- Consumer: Uses a resource (data).

- E.g.: `ps | grep "gcc" | wc`.

- Don't want producers & consumers to operate in lockstep (i.e., atomicity).

- Each command must wait for the previous output.

- Implies lots of context switching (i.e., very expensive).

- ^^Solution: Place a fixed-size buffer between producers & consumers.^^

- ^^Synchronise access to buffer.^^

- ^^Producer waits if the buffer is full; the consumer waits if the buffer is empty.^^

- What is a semaphore? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.5

card-next-schedule:: 2022-11-15T00:00:00.000Z

card-last-reviewed:: 2022-11-14T15:50:12.454Z

card-last-score:: 1

- A semaphore is a higher-level synchronisation primitive.

- Semaphores are a kind of generalised lock.

- They are the main synchronisation primitive used in the original UNIX.

- Semaphores are implemented with a counter that is manipulated atomically via 2 operations: signal & wait.

- `wait(semaphore)`: AKA `down()` or `P()`.

- If the counter is greater than zero, decrement it and continue.

- If the counter is zero, block until the semaphore is signalled.

- `signal(semaphore)`: AKA `up()` or `V()`.

- Wake up one waiter, if any; otherwise increment the counter.

- `sem_init(semaphore, counter)`: Set initial counter value.

-

Semaphore PseudoCode #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:13:51.708Z card-last-score:: 1

- `wait()` & `signal()` are critical sections.

- Hence, they must be executed atomically with respect to each other.

- Each semaphore has an associated queue of processes.

- When `wait()` is called by a process:

- If the semaphore is available -> the process continues.

- If the semaphore is unavailable -> the process blocks, waits on queue.

- `signal()` opens the semaphore.

- If processes are waiting on a queue -> one process is unblocked.

- If no processes are on the queue -> the signal is remembered for the next time `wait()` is called.

- Note: Blocking processes is not spinning - they release the CPU to do other work.

-

    // Pseudocode: wait() and signal() each run atomically.
    struct semaphore {
        int value;
        queue L;  // list of blocked processes
    };

    wait(S) {
        if (S.value > 0) {
            S.value--;
        } else {
            add this process to S.L;
            block;
        }
    }

    signal(S) {
        if (S.L != EMPTY) {
            remove a process P from S.L;
            wakeup(P);
        } else {
            S.value++;
        }
    }

-

Semaphore Initialisation

- If the semaphore is initialised to `1`: #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T15:52:48.262Z card-last-score:: 1

- The first call to `wait` goes through -> The semaphore value goes from `1` to `0`.

- The second call to `wait` blocks -> The semaphore value stays at `0`, the process goes on the queue.

- If the first process calls `signal()` -> The semaphore value stays at `0` and wakes up the second process.

- The semaphore acts like a mutex lock.

- We can use semaphores to implement locks.

- This is called a binary semaphore.

- If the semaphore is initialised to `2`: #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T15:51:38.462Z card-last-score:: 1

- The initial value of the semaphore = the number of processes that can be active at once.

- `sem_init(sem, 2)`: `value = 2, L = []`.

- Consider multiple processes:

- Process1: `wait(sem)`. `value = 1, L = []` -> P1 executes.

- Process2: `wait(sem)`. `value = 0, L = []` -> P2 executes.

- Process3: `wait(sem)`. `value = 0, L = [P3]` -> P3 blocks.
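The trace above can be replayed with POSIX unnamed semaphores from `<semaphore.h>`. To keep the sketch single-threaded it uses the non-blocking `sem_trywait` in place of `wait`, so a call that would block reports failure instead of queueing. The helpers `try_enter` and `current_value` are invented names, and unnamed semaphores are assumed to be available (they are on Linux, but not on every platform).

```c
#include <assert.h>
#include <errno.h>
#include <semaphore.h>

/* Tries to pass the semaphore without blocking.
   Returns 1 if the caller got through, 0 if wait() would have blocked. */
int try_enter(sem_t *sem) {
    if (sem_trywait(sem) == 0)
        return 1;
    assert(errno == EAGAIN);  /* counter was already zero */
    return 0;
}

/* Returns the counter value reported by sem_getvalue. */
int current_value(sem_t *sem) {
    int value = 0;
    sem_getvalue(sem, &value);
    return value;
}
```

After `sem_init(&sem, 0, 2)`, the first two calls to `try_enter` succeed and leave the counter at 1 and then 0, and a third call fails, matching P1, P2 and P3 above. A following `sem_post` with nobody waiting raises the counter back to 1, which is the "signal is remembered" case.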

-

Counting Semaphores #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T15:52:26.139Z card-last-score:: 1

- Allocating a number of resources.

- Shared buffers: each time you want to access a buffer, call `wait()`.

- You are queued if there is no buffer available.

- Counter is initialised to `N` = number of resources.

- This is called a counting semaphore.

- Useful for conditional synchronisation.

- i.e., if one process is waiting for another process to finish a piece of work before it continues.

-

Semaphores for Mutual Exclusion #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:15:56.292Z card-last-score:: 1

- With semaphores, guaranteeing mutual exclusion for `N` processes is trivial.

    // Pseudocode: cobegin runs its statements concurrently.
    semaphore mutex = 1;

    void Process(int i) {
        while (1) {
            // Non-critical section
            wait(mutex);    // grab the mutual exclusion semaphore
            // Critical section
            signal(mutex);  // release it again
        }
    }

    int main() {
        cobegin {
            Process(1);
            Process(2);
        }
    }

-

Bounded Buffer Problem #card

collapsed:: true card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-19T00:00:00.000Z card-last-reviewed:: 2022-11-18T18:37:38.649Z card-last-score:: 1

- Producer-Consumer Problem:

- Buffer in memory - finite size of `N` entries.

- A producer process inserts an entry into the buffer.

- A consumer process removes an entry from the buffer.

- Processes are concurrent - we must use a synchronisation mechanism to control access to shared variables describing the buffer state.
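One common way to synchronise the bounded buffer, sketched here with POSIX semaphores and threads, uses three semaphores: one counting empty slots, one counting full slots, and a binary one guarding the buffer indices. All names (`BUF_SIZE`, `ITEMS`, `empty_slots`, `full_slots`, `buf_mutex`, `run_bounded_buffer`) are assumptions made for this example, and threads stand in for processes.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

#define BUF_SIZE 4   /* N entries in the buffer */
#define ITEMS 100    /* how many items the producer sends */

static int buffer[BUF_SIZE];
static int buf_in, buf_out;                    /* next write / read position */
static sem_t empty_slots, full_slots, buf_mutex;

static void *producer(void *arg) {
    (void)arg;
    for (int i = 1; i <= ITEMS; i++) {
        sem_wait(&empty_slots);                /* wait if the buffer is full */
        sem_wait(&buf_mutex);
        buffer[buf_in] = i;
        buf_in = (buf_in + 1) % BUF_SIZE;
        sem_post(&buf_mutex);
        sem_post(&full_slots);                 /* one more item available */
    }
    return NULL;
}

static void *consumer(void *arg) {
    long *sum = arg;
    for (int i = 0; i < ITEMS; i++) {
        sem_wait(&full_slots);                 /* wait if the buffer is empty */
        sem_wait(&buf_mutex);
        *sum += buffer[buf_out];
        buf_out = (buf_out + 1) % BUF_SIZE;
        sem_post(&buf_mutex);
        sem_post(&empty_slots);                /* one more free slot */
    }
    return NULL;
}

/* Transfers 1..ITEMS through the buffer and returns the consumer's sum. */
long run_bounded_buffer(void) {
    pthread_t p, c;
    long sum = 0;
    int rc;

    buf_in = buf_out = 0;
    sem_init(&empty_slots, 0, BUF_SIZE);
    sem_init(&full_slots, 0, 0);
    sem_init(&buf_mutex, 0, 1);
    rc = pthread_create(&p, NULL, producer, NULL);
    assert(rc == 0);
    rc = pthread_create(&c, NULL, consumer, &sum);
    assert(rc == 0);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&empty_slots);
    sem_destroy(&full_slots);
    sem_destroy(&buf_mutex);
    return sum;
}
```

With `ITEMS` set to 100 the consumer should receive every value from 1 to 100 exactly once, so `run_bounded_buffer()` returns 5050 regardless of how the two threads are scheduled.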

-

Producer-Consumer Single Buffer

-

Semaphores Can Be Hard to Use #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T20:24:06.193Z card-last-score:: 1

- Complex patterns of resource usage.

- Cannot capture relationships with semaphores alone.

- Need extra state variables to record information.

- Tends to produce buggy code that is hard to write correctly.

- E.g., if one coder forgets to do `V()` / `signal()` after a critical section, the whole system can deadlock.

-

Monitors

collapsed:: true- What are Monitors? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.5

card-next-schedule:: 2022-11-24T00:00:00.000Z

card-last-reviewed:: 2022-11-23T12:18:42.748Z

card-last-score:: 1

- Monitors are an extension of the monolithic monitor used in the OS to allocate memory.

- A programming language construct that supports controlled access to shared data.

- Synchronisation code added by compiler, enforced at runtime -> less work for programmer.

- Monitors can keep track of who is allowed to access the shared data and when they can do it.

- Monitors are a higher-level construct than semaphores.

- Monitors encapsulate:

- Shared data structures.

- Procedures that operate on shared data.

- Synchronisation between concurrent processes that invoke these procedures.
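C has no monitor construct, so the sketch below only emulates one by hand: the shared data, its lock and its condition variable live in one struct, and every procedure takes the lock on entry and releases it on exit. In a language with real monitors the compiler would generate that locking. The `account_monitor` type and its functions are hypothetical names chosen for illustration.

```c
#include <assert.h>
#include <pthread.h>

/* Monitor-style bundle: shared data plus its synchronisation. */
typedef struct {
    pthread_mutex_t lock;         /* only one caller inside at a time */
    pthread_cond_t  funds_added;  /* signalled whenever balance grows */
    long balance;                 /* the shared data */
} account_monitor;

void account_init(account_monitor *m) {
    pthread_mutex_init(&m->lock, NULL);
    pthread_cond_init(&m->funds_added, NULL);
    m->balance = 0;
}

void account_deposit(account_monitor *m, long amount) {
    assert(amount >= 0);
    pthread_mutex_lock(&m->lock);
    m->balance += amount;
    pthread_cond_broadcast(&m->funds_added);  /* wake waiting withdrawers */
    pthread_mutex_unlock(&m->lock);
}

/* Blocks (without spinning) until the balance covers the amount. */
void account_withdraw(account_monitor *m, long amount) {
    assert(amount >= 0);
    pthread_mutex_lock(&m->lock);
    while (m->balance < amount)
        pthread_cond_wait(&m->funds_added, &m->lock);
    m->balance -= amount;
    pthread_mutex_unlock(&m->lock);
}

long account_balance(account_monitor *m) {
    pthread_mutex_lock(&m->lock);
    long value = m->balance;
    pthread_mutex_unlock(&m->lock);
    return value;
}
```

Callers never touch `balance` or the lock directly, only the four procedures, which is the encapsulation the bullets above describe.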

-

-

Detection & Protection of Deadlock

-

Requirements for Deadlock #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-19T00:00:00.000Z card-last-reviewed:: 2022-11-18T18:37:21.446Z card-last-score:: 1

- All four conditions must hold for deadlock to occur:

- Mutual Exclusion: At least one resource must be non-shareable.

- No Pre-Emption: Resources cannot be pre-empted (no way to break priority or take a resource away once allocated).

- Locks have this property.

- Hold & Wait: There is an existing process holding a resource and waiting for another resource.

- Circular Wait: There exists a set of processes $P_1, P_2, \cdots, P_N$ such that $P_1$ is waiting for $P_2$, $P_2$ is waiting for $P_3$, ..., and $P_N$ is waiting for $P_1$.

- If only three conditions hold then you can get starvation, but not deadlock.
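The circular wait condition can be checked mechanically on a small "waits-for" table. The sketch assumes, for simplicity, that each process waits for at most one other process; `waits_for`, `NO_WAIT` and `has_circular_wait` are names invented for this example.

```c
#include <assert.h>
#include <stdbool.h>

#define NO_WAIT (-1)  /* marks a process that is not waiting for anyone */

/* waits_for[i] is the index of the process that process i is waiting for,
   or NO_WAIT. Returns true if following the waits from some process
   leads back to that same process. */
bool has_circular_wait(const int *waits_for, int n) {
    for (int start = 0; start < n; start++) {
        int current = waits_for[start];
        /* A cycle through `start` needs at most n hops. */
        for (int hops = 0; hops < n && current != NO_WAIT; hops++) {
            assert(current >= 0 && current < n);
            if (current == start)
                return true;
            current = waits_for[current];
        }
    }
    return false;
}
```

For example, `{1, 2, 0}` (P0 waits for P1, P1 for P2, P2 for P0) contains a circular wait, while `{1, 2, NO_WAIT}` is only a chain that can still drain once P2 finishes.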

-

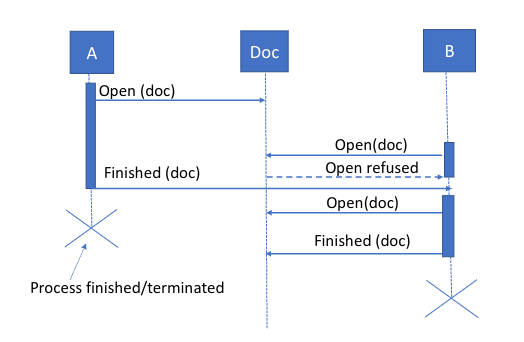

Deadlocks Without Locks #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:13:42.597Z card-last-score:: 1

- Deadlocks can occur for any resource or any time a process waits, e.g.:

- Messages: waiting to receive a message before sending a message.

- i.e., hold & wait.

- Allocation: waiting to allocate resources before freeing another resource.

- i.e., hold & wait.

-

Dealing with Deadlocks #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-24T00:00:00.000Z card-last-reviewed:: 2022-11-23T12:16:50.624Z card-last-score:: 1

-

Strategy 1: Ignore #card

collapsed:: true card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:14:57.281Z card-last-score:: 1

- Ignore the fact that deadlocks may occur.

- Write code, put nothing special in.

- Sometimes you have to reboot the system.

- May work for some unimportant or simple applications where deadlock does not occur often.

- Quite a common approach.

-

Strategy 2: Reactive #card

collapsed:: true card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-19T00:00:00.000Z card-last-reviewed:: 2022-11-18T18:37:05.883Z card-last-score:: 1

- Periodically check for evidence of a deadlock.

- E.g., add timeouts when acquiring a lock; if you time out, treat it as evidence that deadlock may have occurred and take a recovery action.

- Recovery actions:

- Blue screen of death & reboot computer.

- Pick a process to terminate, e.g., a low-priority one.

- Only works with some types of applications.

- May corrupt data, so the process needs to do clean-up when terminated.

-

Strategy 3: Proactive #card

collapsed:: true card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:18:36.908Z card-last-score:: 1

- Prevent one of the four necessary conditions for deadlock.

- No single approach is appropriate (or possible) for all circumstances.

- Need techniques for each of the four conditions.

-

Solution 1: No Mutual Exclusion

- Make resources shareable.

- E.g., read-only files - no need for locks.

-

Solution 2: Adding Pre-Emption

- Locks cannot be pre-empted but other pre-emptive methods are possible.

- Strategy: pre-empt resources.

- Example: If process `A` is waiting for a resource held by process `B`, then take the resource from `B` and give it to `A`.

- Problems:

- Only works for some resources

- E.g., CPU & Memory (using virtual memory).

- Not possible if a resource cannot be saved & restored.

- Otherwise, taking away a lock causes issues.

- Also, there is an overhead cost for "pre-empt" & "restore".

-

Solution 3: Avoid Hold & Wait

- Only request a resource when you have none, i.e., release a resource before requesting another.

- Never hold `x` when you want `y`.

- Works in many cases, but you cannot maintain a relationship between `x` & `y`.

- Acquire all resources at once, e.g., use a single lock to protect all data.

- Having fewer locks is called lock coarsening.

- Problem: All processes accessing `A` or `B` cannot run at the same time, even if they don't access both variables.

-

Solution 4: Eliminate Circular Waits

- Strategy: Impose an ordering on resources.

- Processes must acquire the highest-ranked resource first.

- Locks are always acquired in the same order.

- We have eliminated the circular dependency, but we will need to lock a resource for a longer period.

- Strategy: Define an ordering of all locks in your program.

- Always acquire locks in that order.

- Problem: Sometimes you do not know in advance the order in which the resources will be used.

- How do we know the global order?

- Need extra code to find this out and then acquire them in the right order.

- It could get worse.
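One simple global order, used here purely as an illustration, is the memory address of each lock: whichever way round the caller names two mutexes, the one at the lower address is always locked first. The helpers `first_in_order`, `lock_in_order` and `unlock_both` are invented names, and comparing addresses via `uintptr_t` is an assumption that holds on common platforms.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

/* Returns whichever of the two locks comes first in the global order
   (here: the one at the lower address). */
pthread_mutex_t *first_in_order(pthread_mutex_t *x, pthread_mutex_t *y) {
    return ((uintptr_t)x < (uintptr_t)y) ? x : y;
}

/* Locks two distinct mutexes, always in the same global order. */
void lock_in_order(pthread_mutex_t *x, pthread_mutex_t *y) {
    assert(x != y);
    pthread_mutex_t *first = first_in_order(x, y);
    pthread_mutex_t *second = (first == x) ? y : x;
    pthread_mutex_lock(first);
    pthread_mutex_lock(second);
}

/* Unlock order does not matter for deadlock avoidance. */
void unlock_both(pthread_mutex_t *x, pthread_mutex_t *y) {
    pthread_mutex_unlock(x);
    pthread_mutex_unlock(y);
}
```

Two processes calling `lock_in_order(&a, &b)` and `lock_in_order(&b, &a)` now compete for the same first lock, so neither can end up holding one lock while waiting for the other in a cycle.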

-

-