13 KiB

13 KiB

- #CT213 - Computer Systems & Organisation

- Previous Topic: Process Management

- Next Topic: Process Synchronisation

- Relevant Slides:

-

Scheduling

- What is scheduling? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.22

card-next-schedule:: 2022-11-18T00:00:00.000Z

card-last-reviewed:: 2022-11-17T09:47:50.266Z

card-last-score:: 1

- Scheduling allows one process to use the CPU while the execution of another process is on hold (i.e., in the waiting state) due to unavailability of any resource like I/O etc.

- It aims to make the system efficient, fast, & fair.

- It is part of the process manager.

- Scheduling is the mechanism that handles the ^^removal of the running processes from the CPU and the selection of another process.^^

- It is responsible for multiplexing processes in the CPU.

- When it is time for the running process to be removed from the CPU (into a ready or suspended state), a different process is selected from the set of processes in the ready state.

- The selection of another process is based on a particular strategy - the scheduling algorithm will determine the order in which the OS will execute the processes.

- When it is time for the running process to be removed from the CPU (into a ready or suspended state), a different process is selected from the set of processes in the ready state.

- Scheduling allows one process to use the CPU while the execution of another process is on hold (i.e., in the waiting state) due to unavailability of any resource like I/O etc.

-

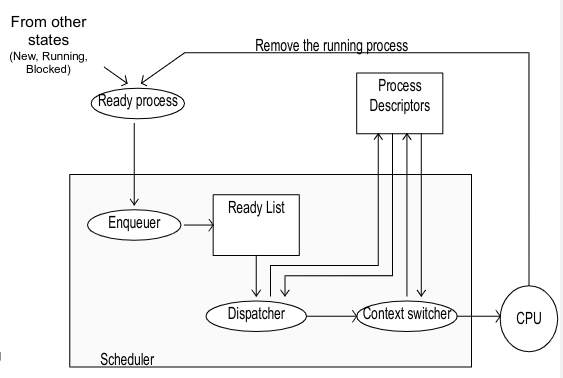

Scheduler Organisation #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:26:05.897Z card-last-score:: 1- When a process is changed to the ready state. the enqueuer places a pointer to the process descriptor into a ready list.

- Whenever the scheduler switches the CPU from executing one process to another, the context switcher saves the contents of all the processor registers of the process being removed into the process' descriptor.

- There are two types of context switch: Voluntary & Involuntary.

- The dispatcher is invoked after the current process has been from the CPU.

- The dispatcher chooses one of the processes enqueued in the ready list and then allocates CPU to that process by performing another context switch from itself to the selected process.

-

Scheduler Types

collapsed:: true- What are the two main types of scheduler? #card

card-last-interval:: 13.48

card-repeats:: 3

card-ease-factor:: 2.7

card-next-schedule:: 2022-11-28T03:39:05.248Z

card-last-reviewed:: 2022-11-14T16:39:05.248Z

card-last-score:: 5

- Cooperative Scheduler (Voluntary CPU Sharing).

- Preemptive Scheduler (Involuntary CPU Sharing).

-

Cooperative Scheduler (Voluntary CPU Sharing) #card

card-last-interval:: 9.28 card-repeats:: 3 card-ease-factor:: 2.32 card-next-schedule:: 2022-11-24T02:11:30.468Z card-last-reviewed:: 2022-11-14T20:11:30.468Z card-last-score:: 3- Each process will periodically invoke the process scheduler, voluntarily sharing the CPU.

- Each process should call a function that will implement the process scheduling.

\text{yield}(P_{current}, P_{next})(sometimes implemented as instruction in hardware), whereP_{current}is an identifier of the current process andP_{next}is an identifier of the next process.

- Cooperative multitasking allows much simpler implementation of applications, because their ^^execution is never unexpectedly interrupted by the process scheduler.^^

- Possible problem: If the process does not voluntarily cooperate with the others, one process could keep the CPU forever.

-

Preemptive Scheduler (Involuntary CPU Sharing) #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.22 card-next-schedule:: 2022-11-22T00:00:00.000Z card-last-reviewed:: 2022-11-21T13:11:05.756Z card-last-score:: 1- The interrupt system enforces periodic involuntary interruption of any process's execution; it can force a process to involuntarily execute a yield type function (or instruction).

- This is done by incorporating an interval timer device that produces an interrupt whenever the time expires.

- The programmable interval timer will cause an interrupt to run every

Kclock ticks of a time interval, thus causing the hardware to execute the logical equivalent of a yield instruction to invoke the interruption handler. - The interrupt handler for the timer interrupt will call the scheduler to reschedule the processor without any action on the part of the running process.

- The scheduler decides which process is run next.

- The scheduler is guaranteed to be invoked once every

Kclock ticks.- Even if a certain process executes in an infinite loop, it will not block the execution of the other processes.

- The interrupt system enforces periodic involuntary interruption of any process's execution; it can force a process to involuntarily execute a yield type function (or instruction).

- What are the two main types of scheduler? #card

card-last-interval:: 13.48

card-repeats:: 3

card-ease-factor:: 2.7

card-next-schedule:: 2022-11-28T03:39:05.248Z

card-last-reviewed:: 2022-11-14T16:39:05.248Z

card-last-score:: 5

-

Performance Elements

- Having a set of processes

P = \{p_i, 0 \leq i \leq n\}.- Service Time -

\tau(p_i): The amount of time that a process needs to spend in the active/running state before it completes. - Wait Time -

W(p_i): The time that the process spends waiting in the ready state before its first transition to the active state. - Turn-around Time -

T_{TRnd}(p_i): The amount of time between the moment that a process enters the ready state and the moment that the process exits the running state for the last time.

- Service Time -

- These elements are used to measure the performance of each scheduling algorithm.

- Having a set of processes

-

Selection Strategies #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T20:13:18.970Z card-last-score:: 1-

Non-Preemptive Strategies #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.7 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:49:49.983Z card-last-score:: 1- ==Allow any process to run to completion once it has been allocated control of the CPU.==

- A process that gets the control of the CPU releases the CPU whenever it ends or when it voluntarily gives up control of the CPU.

-

Preemptive Strategies #card

card-last-interval:: 4 card-repeats:: 2 card-ease-factor:: 2.22 card-next-schedule:: 2022-11-25T13:08:54.894Z card-last-reviewed:: 2022-11-21T13:08:54.895Z card-last-score:: 3- ==The process with the highest priority among all the ready process is allocated the CPU.==

- ==All lower priority processes are made to yield to the highest priority process whenever it requests the CPU.==

- The scheduler is called every time a process enters the ready queue as well as when an interval timer expires.

- Preemptive strategies allow for equitable resource sharing among processes, at the expense of overloading the system.

- ==The process with the highest priority among all the ready process is allocated the CPU.==

-

-

Scheduling Algorithms

-

First Come, First Served (FCFS) #card

card-last-interval:: 8.32 card-repeats:: 3 card-ease-factor:: 2.08 card-next-schedule:: 2022-11-23T03:12:10.431Z card-last-reviewed:: 2022-11-14T20:12:10.431Z card-last-score:: 3- Non-preemptive algorithm.

- This scheduling strategy assigns priority to processes by the order in which they request the processor.

- The priority of a process is computed by the enqueuer by time stamping all incoming processes and then having the dispatcher select the process that has the ^^oidest time stamp.^^

- Possible implementation: Using a FIFO data structure (where each entry points to a process descriptor). The enqueuer adds processes to the tail of the queue and the dispatcher removes processes from the head of the queue.

- Easy to implement.

- Not widely used because of ^^unpredictable turn-around time & waiting time.^^

-

Shortest Job First (SJF) #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.46 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T20:19:28.311Z card-last-score:: 1- Non-preemptive algorithm.

- SJF is an optimal algorithm from the perspective of average turn-around time - it minimises the average turn-around time.

- Preferential service of small jobs.

- Requires the ^^knowledge of the service time^^ for each process.

- In extreme cases where the system has little idle time, processes with large service time will never be served.

- In the case where it is not possible to know the service time for each process, the service time is estimated using predictors.

-

Shortest Remaining Time Next (SRTN) #card

card-last-interval:: 9.28 card-repeats:: 3 card-ease-factor:: 2.32 card-next-schedule:: 2022-11-24T02:11:11.684Z card-last-reviewed:: 2022-11-14T20:11:11.685Z card-last-score:: 3- Similar to SJF, but preemptive.

- If a long job is mostly complete, it might have a very short time remaining, and therefore would be prioritised.

- Similar to SJF, but preemptive.

-

Time Slice (Round Robin) #card

card-last-interval:: 8.32 card-repeats:: 3 card-ease-factor:: 2.08 card-next-schedule:: 2022-11-23T03:11:54.075Z card-last-reviewed:: 2022-11-14T20:11:54.076Z card-last-score:: 3- Preemptive algorithm.

- Each process gets a time slice of CPU time, distributing the processing time equitably among all processes that are requesting the processor.

- Whenever the time slice expires, control of the CPU is given to the next process in the ready list, and the process being switched from is placed back into the ready process list.

- Time Slice implies the existence of a specialised timer that measures the processor time for each process.

- Every time a process becomes active, the timer is intitialised.

- Not very well suited for long jobs, as the scheduler will be called multiple times until the job is done.

- Very sensitive to the size of the time slice.

- Too big -> large delays in the response time for interactive processes.

- Too small -> too much time spent running the scheduler.

- Very big -> turns into FCFS.

- The time slice is determined by analysing the number of instructions that the processor can execute in a given time slice.

-

Priority-Based Preemptive Scheduling (Event Driven) #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:28:05.394Z card-last-score:: 1- Both preemptive & non-preemptive variants exist.

- Each process has an ^^externally assigned priority.^^

- Every time an event occurs that generates a process switch, the ^^process with the highest priority^^ is chosen from the ready process list.

- There is a possibility that processes with low priority will never gain CPU time.

- There are variants with static & dynamic priorities.

- The dynamic priority computation solves the problem that some processes may never gain CPU time - the longer a process waits, the higher its priority becomes.

- Used for real-time systems.

-

Multiple Level Queue Scheduling #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.6 card-next-schedule:: 2022-11-22T00:00:00.000Z card-last-reviewed:: 2022-11-21T13:11:11.306Z card-last-score:: 1- Complex systems have requirements for real-time, interactive users and batch jobs - Therefore, a combined scheduling mechanism should be used.

- The processes are divided into classes.

- Each class has a process queue, and has been assigned a specific scheduling algorithm.

- Each process queue is treated according to its queue scheduling algorithm.

- Each queue is assigned a priority.

- As long as there are processes in a higher priority queue, those will be serviced.

- Each process queue is treated according to its queue scheduling algorithm.

- Each class has a process queue, and has been assigned a specific scheduling algorithm.

-

Multiple Level Queue (with Feedback) #card

card-last-interval:: -1 card-repeats:: 1 card-ease-factor:: 2.5 card-next-schedule:: 2022-11-15T00:00:00.000Z card-last-reviewed:: 2022-11-14T16:27:34.399Z card-last-score:: 1- Same as MLQ, but the ^^processes can migrate from class to class^^ in a dynamic fashion.

- Different strategies exist to modify the priority of a process.

- Increase the priority for a given process. (E.g., the user needs a larger share of the CPU to sustain acceptable service).

- Decrease the priority for a given process. (E.g., the user process is trying to get more CPU share, which may impact on the other users).

- If a process is giving up the CPU before its time slice expires, then the process is assigned to a higher priority queue.

- During the evolution to completion, a process may go through a number of different classes.

- Any of the previous algorithms covered may be used for treating a specific process class.

-

- What is scheduling? #card

card-last-interval:: -1

card-repeats:: 1

card-ease-factor:: 2.22

card-next-schedule:: 2022-11-18T00:00:00.000Z

card-last-reviewed:: 2022-11-17T09:47:50.266Z

card-last-score:: 1